Building Machines that Learn and Think Like People (pt 3. Developmental Software)

01 Jan 2018 | review
tags: cognitive-science, machine-learning, brain, deep-learning

Note: Ideas and opinions that are my own and not of the article will be in an italicized grey.

Series Table of Contents

Part 1: Introduction and History

Part 2: Challenges for Building Human-Like Machines

Part 3: Developmental Software

Part 4: Learning as Rapid Model-Building

Part 5: Thinking Fast

Resources

Glossary

Article Table of Contents

Intuitive Physics

Intuitive Psychology

Summary

References

Neural networks can learn rich, structured representations given abundant data, but their learning seems to be limited (at least without unlimited data) to what can be learned through pattern recognition. Increasingly, inductive biases, introduced through either architectural or algorithmic choices, appear to be the key to effective learning with neural networks. However, many core ingredients of human-level learning and thinking are still missing from neural networks. To gain insight into which inductive biases human learning might rely on, we can look at some core abilities that humans exhibit by early childhood.

Cognitive scientists have found that early in development, humans have a strong understanding of several domains including numbers, physics, and psychology (Spelke & Kinzler, 2007). The authors refer to this as “developmental start-up software,” and claim that this likely plays an active and important role in producing human-like learning and thought in ways contemporary machine learning has yet to capture.

The process of developing cognitive representations for these domains can be seen as the development of “intuitive theories” (Schulz, 2012). Experimental work with children supports the picture of the “child as a scientist,” who learns about a topic in a way that is (at least partially) akin to the scientific process: children seek out data that distinguish between hypotheses, isolate variables, and test causal hypotheses (Cook et al., 2011). Childhood learning seems to resemble an active process of formulating and testing intuitive theories about various aspects of the world.
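The idea of seeking out data that distinguish between hypotheses can be made concrete with expected information gain, a standard tool from optimal experiment design. The sketch below is a swapped-in formalization, not the method of Cook et al. (2011): it assumes a hypothetical toy in which one or both of two beads (A and B) may activate a machine, with three equally likely hypotheses. Testing a single bead in isolation splits the hypotheses, while testing both beads together is uninformative because every hypothesis predicts that the machine activates.

```python
import math
from typing import Dict, Iterable

# Hypothetical toy setup: one or both beads may make a machine light up.
HYPOTHESES = ["only A works", "only B works", "both work"]

def machine_activates(hypothesis: str, beads: frozenset) -> bool:
    """Outcome of placing `beads` on the machine if `hypothesis` is true."""
    if hypothesis == "only A works":
        return "A" in beads
    if hypothesis == "only B works":
        return "B" in beads
    return len(beads) > 0  # "both work": any bead activates it

def entropy(probs: Iterable[float]) -> float:
    """Shannon entropy in bits."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

def expected_information_gain(beads: frozenset, prior: Dict[str, float]) -> float:
    """Prior entropy minus the expected entropy after observing the outcome."""
    expected_posterior_entropy = 0.0
    for outcome in (True, False):
        consistent = {h: p for h, p in prior.items() if machine_activates(h, beads) == outcome}
        p_outcome = sum(consistent.values())
        if p_outcome == 0:
            continue  # this outcome cannot occur under any hypothesis
        posterior = [p / p_outcome for p in consistent.values()]
        expected_posterior_entropy += p_outcome * entropy(posterior)
    return entropy(prior.values()) - expected_posterior_entropy

prior = {h: 1 / 3 for h in HYPOTHESES}
experiments = {
    "A alone": frozenset({"A"}),
    "B alone": frozenset({"B"}),
    "A and B together": frozenset({"A", "B"}),
}
for name, beads in experiments.items():
    print(f"{name}: {expected_information_gain(beads, prior):.3f} bits")
```

Running it reports about 0.918 bits for either single bead and 0 bits for the pair, which mirrors the strategy of isolating variables.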

Studies indicate that these domains (or at least methods of analysis and learning about the world) are shared cross-culturally and partly with non-human animals. Here, we focus on intuitive theories of physics and psychology.

Intuitive Physics

Researchers have found that infants learn early on to incorporate physical characteristics into their representations of objects. For example, by 2 months, they expect inanimate objects to follow principles of persistence, continuity, cohesion, and solidity (Spelke, 1990); by 6 months, they have developed expectations for the movement and properties of rigid, soft, and liquid bodies (Rips & Hespos, 2015). Unfortunately, there is no agreement on the computational principles underlying these phenomena (Baillargeon et al., 2009; Siegler & Chen, 1998).

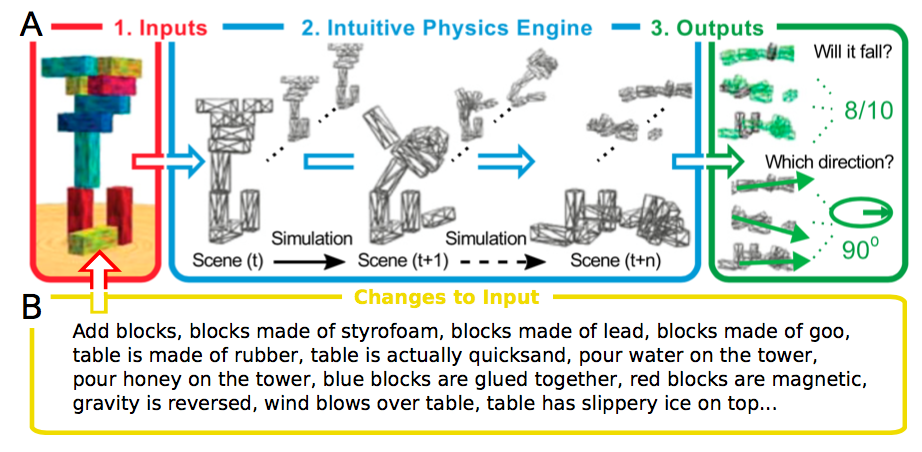

Recently, researchers have started to frame intuitive physics as inference over a physics “software engine” (Bates et al., 2015). In such experiments, researchers literally use a physics engine to simulate all or a subset of the possible physical outcomes of a scenario and then infer which outcome is most likely. A useful property of these engines is that they are simplified and incomplete, so the state of a scene has to be approximated probabilistically, something humans likely do as well. Further, such simulation-based models seem to capture how humans make predictions and simulate hypothetical events in the world (Battaglia et al., 2013; Téglás et al., 2011).
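The simulation-based view can be sketched as a Monte Carlo estimate: perturb the perceived scene with noise, run the “engine” on each sample, and report the fraction of samples in which the outcome of interest occurs. The sketch below is loosely in the spirit of Battaglia et al. (2013) but is not their model: it assumes a 2-D stack of identical, equal-mass, unit-width blocks, Gaussian noise on perceived block positions (`noise_sd` is an assumed parameter), and a toy static-equilibrium rule (`tower_is_stable`, a hypothetical helper) standing in for a full physics engine.

```python
import random
from typing import List

BLOCK_WIDTH = 1.0  # assumption: identical, equal-mass blocks of unit width

def tower_is_stable(xs: List[float], width: float = BLOCK_WIDTH) -> bool:
    """Toy static-stability check for a single 2-D stack (xs[0] is the bottom block's center).

    At every interface, the center of mass of all blocks above it must lie
    over the contact region shared by the two touching blocks.
    """
    for k in range(1, len(xs)):
        above = xs[k:]
        center_of_mass = sum(above) / len(above)
        contact_lo = max(xs[k - 1], xs[k]) - width / 2
        contact_hi = min(xs[k - 1], xs[k]) + width / 2
        if not contact_lo <= center_of_mass <= contact_hi:
            return False
    return True

def probability_of_falling(xs: List[float], noise_sd: float = 0.1,
                           n_samples: int = 2000, seed: int = 0) -> float:
    """Monte Carlo estimate: run the toy 'engine' on many noisy percepts of the scene."""
    rng = random.Random(seed)
    falls = 0
    for _ in range(n_samples):
        perceived = [x + rng.gauss(0.0, noise_sd) for x in xs]  # perceptual uncertainty
        if not tower_is_stable(perceived):
            falls += 1
    return falls / n_samples

print(probability_of_falling([0.0, 0.0, 0.0]))   # aligned tower
print(probability_of_falling([0.0, 0.45, 0.9]))  # staircase leaning right
```

The second tower is a useful test case: each block overlaps the one beneath it, yet the combined center of mass of the top two blocks overhangs the base, so almost every simulated percept topples, while the aligned tower almost never falls.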

It remains unclear whether physical properties can be embedded (implicitly or explicitly) into deep learning models. One successful attempt is PhysNet (Lerer et al., 2016), which learned to predict the stability of towers of 2, 3, or 4 blocks. Its performance matched human performance on real images and exceeded it on synthetic images. However, it requires extensive training (hundreds of thousands of examples) and has limited generalization abilities.

A clear challenge is whether deep learning models can be made to generalize well without explicitly simulating causal interactions (i.e. without containing causal models of the world). One possible approach is to have the model emulate a physics simulator, with successive layers of abstraction hopefully learning successively higher-level physical quantities (e.g. distance, velocity, and acceleration). This would be akin to the way current vision models learn successive abstractions over images (lower layers learn edges, intermediate layers learn textures, and deeper layers learn objects). In deep reinforcement learning, this might yield models that are more robust to slight alterations in the test environment, which currently require re-training.
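As a deliberately tiny stand-in for a network that emulates a physics simulator, the sketch below fits a linear model by ordinary least squares to state transitions produced by a toy simulator of a body under constant gravity. This is an assumption-laden simplification: a single linear layer rather than a deep network, with an assumed timestep (`DT`) and gravity constant (`GRAVITY`). The point is that the fitted coefficients correspond to the physical quantities mentioned above (the velocity term in the position update and the acceleration term in the velocity update), learned purely from the simulator's outputs.

```python
import random
from typing import List, Tuple

DT = 0.1        # assumed simulator timestep (s)
GRAVITY = -9.8  # assumed constant acceleration (m/s^2)

def simulate_step(x: float, v: float) -> Tuple[float, float]:
    """Toy 'physics engine': explicit Euler step for a body under constant gravity."""
    return x + v * DT, v + GRAVITY * DT

def solve(A: List[List[float]], b: List[float]) -> List[float]:
    """Solve A w = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    w = [0.0] * n
    for r in range(n - 1, -1, -1):
        w[r] = (M[r][n] - sum(M[r][c] * w[c] for c in range(r + 1, n))) / M[r][r]
    return w

def fit_least_squares(features: List[List[float]], targets: List[float]) -> List[float]:
    """Ordinary least squares via the normal equations (F^T F) w = F^T y."""
    n = len(features[0])
    A = [[sum(f[i] * f[j] for f in features) for j in range(n)] for i in range(n)]
    b = [sum(f[i] * y for f, y in zip(features, targets)) for i in range(n)]
    return solve(A, b)

# Collect transitions from the simulator, then fit one linear map per output.
rng = random.Random(0)
states = [(rng.uniform(0, 10), rng.uniform(-5, 5)) for _ in range(200)]
features = [[x, v, 1.0] for x, v in states]  # position, velocity, bias
next_states = [simulate_step(x, v) for x, v in states]
w_x = fit_least_squares(features, [nx for nx, _ in next_states])
w_v = fit_least_squares(features, [nv for _, nv in next_states])

def emulated_step(x: float, v: float) -> Tuple[float, float]:
    """Learned emulator: predicts the next state without calling the simulator."""
    return (w_x[0] * x + w_x[1] * v + w_x[2],
            w_v[0] * x + w_v[1] * v + w_v[2])

print("x' coefficients:", [round(c, 3) for c in w_x])  # approximately [1, DT, 0]
print("v' coefficients:", [round(c, 3) for c in w_v])  # approximately [0, 1, GRAVITY * DT]
```

Because the toy dynamics are exactly linear in (x, v), the fitted model also extrapolates to states outside its training range; a deep network fitted to richer dynamics comes with no such guarantee, which is the generalization question raised above.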

Intuitive Psychology

Researchers have found that intuitions about other agents also emerge in infancy. For example, pre-verbal infants learn to distinguish inanimate objects from animate objects using low-level cues such as the presence of eyes or whether an object initiates movement from rest (Johnson et al., 1998).

Infants also expect agents to act contingently, to have goals, and to pursue those goals efficiently subject to constraints (Csibra, 2008). By just 3 months, infants can discriminate anti-social agents that hurt or hinder others from neutral agents. Soon thereafter, they learn to distinguish anti-social, neutral, and pro-social agents (Hamlin, 2013).

These “intuitive psychology” abilities are likely useful for AI agents learning to play games and learning to model the behavior of other agents within a game. For example, an AI agent with these abilities could learn to distinguish animate from inanimate objects, categorize animate objects, treat them as other acting agents, and learn relevant attributes for each category of animate object (e.g. harmful/helpful, anti-social/pro-social).

Cognitive scientists have tried to model social perception in a rule-based, cue-driven manner (Schlottmann et al., 2013), but such rules are not robust to the many ways an agent might interpret highly variable scenes. An alternative approach that is becoming increasingly popular is to model agents as having generative models of the actions of others (Baker et al., 2009). Such a representation fits well with the example above, in which an agent learns to model animate objects in the world as other acting agents with attributes. Stereotyping the behavior of other agents based on their perceived category membership (i.e. assuming that all agents belonging to a category act the same way) may then allow for quick reasoning about their actions.
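Modeling another agent with a generative model of its actions means that its goals can be inferred by inverting that model with Bayes' rule, the idea behind inverse planning (Baker et al., 2009). The sketch below is a minimal illustration, not Baker et al.'s model: it assumes an open grid with no obstacles, two candidate goals, and a softmax (“Boltzmann-rational”) policy whose efficiency is set by a hypothetical `beta` parameter. It also encodes the infant expectation that agents pursue goals efficiently: moves that reduce the distance to a goal are treated as much more likely.

```python
import math
from typing import Dict, List, Tuple

Pos = Tuple[int, int]
MOVES: Dict[str, Pos] = {"up": (0, 1), "down": (0, -1), "left": (-1, 0), "right": (1, 0)}

def distance(p: Pos, q: Pos) -> int:
    """Manhattan distance on an open grid (no walls or obstacles assumed)."""
    return abs(p[0] - q[0]) + abs(p[1] - q[1])

def action_likelihood(pos: Pos, action: str, goal: Pos, beta: float = 2.0) -> float:
    """Generative model of the other agent: a softmax policy that prefers moves
    bringing it closer to its goal; beta sets how efficiently it acts."""
    scores = {a: -distance((pos[0] + dx, pos[1] + dy), goal) for a, (dx, dy) in MOVES.items()}
    z = sum(math.exp(beta * s) for s in scores.values())
    return math.exp(beta * scores[action]) / z

def infer_goal(start: Pos, actions: List[str], goals: Dict[str, Pos],
               beta: float = 2.0) -> Dict[str, float]:
    """Invert the generative model: P(goal | actions) is proportional to
    P(goal) times the product of P(action | state, goal) over observed steps."""
    posterior = {name: 1.0 / len(goals) for name in goals}  # uniform prior over goals
    pos = start
    for action in actions:
        for name, goal in goals.items():
            posterior[name] *= action_likelihood(pos, action, goal, beta)
        dx, dy = MOVES[action]
        pos = (pos[0] + dx, pos[1] + dy)
    total = sum(posterior.values())
    return {name: p / total for name, p in posterior.items()}

goals = {"A": (0, 4), "B": (4, 4)}
print(infer_goal((2, 0), ["up"], goals))                    # ambiguous: both goals lie upward
print(infer_goal((2, 0), ["up", "right", "right"], goals))  # strongly favors B
```

A single upward step leaves the two goals equally plausible, because both lie upward; subsequent steps to the right are far more consistent with goal B, and the posterior shifts accordingly.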

Just as it is unclear whether deep learning systems can implicitly learn physical properties, it is unclear whether, in their current form, they can learn high-level psychological representations of concepts such as “agents” and “goals.” Endowing them with principles from intuitive psychology could, for example, allow an AI to learn about a game by watching another agent play it. If the other agent consistently avoids a particular object, the AI could infer that the object is dangerous or “anti-social” without experiencing the consequences of interacting with it.
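The avoidance example can be sketched as a Bayesian update over two hypotheses about the object, dangerous or harmless, using only observations of the other agent's behavior. The avoidance probabilities below are assumed, illustrative values (`P_AVOID_IF_DANGEROUS` and `P_AVOID_IF_HARMLESS` are hypothetical names); in a fuller system they might themselves come from a generative model of the other agent's behavior.

```python
from typing import List

# Assumed likelihoods (illustrative only): how often the observed agent
# steers around the object if it is dangerous versus if it is harmless.
P_AVOID_IF_DANGEROUS = 0.9
P_AVOID_IF_HARMLESS = 0.3

def posterior_dangerous(avoided: List[bool], prior: float = 0.5) -> float:
    """Bayesian update on P(object is dangerous) from watching another agent,
    without the learner ever interacting with the object itself."""
    p_danger, p_safe = prior, 1.0 - prior
    for did_avoid in avoided:
        if did_avoid:
            p_danger *= P_AVOID_IF_DANGEROUS
            p_safe *= P_AVOID_IF_HARMLESS
        else:
            p_danger *= 1.0 - P_AVOID_IF_DANGEROUS
            p_safe *= 1.0 - P_AVOID_IF_HARMLESS
    return p_danger / (p_danger + p_safe)

print(posterior_dangerous([True, True, True, True]))  # four avoidances in a row
```

With these assumed numbers, four consecutive avoidances raise the posterior from 0.5 to 81/82 (about 0.988), while a single observation of the agent approaching the object lowers it to 0.125.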

Summary

Research indicates that a number of key skills are already present in infancy. It seems plausible that these skills help us learn and use new information quickly throughout life. Intuitive physics gives us a basis for reasoning about the physical world, and intuitive psychology a basis for reasoning about the social world. However, it is unclear how to incorporate these skills into modern neural networks and endow them with the ability to learn and build on knowledge the way humans do.

At a more fundamental level, from an early age humans actively seek out knowledge, asking questions as they build internal models of the world. How these questions are devised, how their answers are found, and how the resulting knowledge is stored and incorporated remain unclear. Just as there is a concept of the “child as a scientist” actively learning about the world, can we have a “neural network as a scientist”?

References

- Lake, B. M., Ullman, T. D., Tenenbaum, J. B., & Gershman, S. J. (2016). Building Machines That Learn and Think Like People. Behavioral and Brain Sciences, 40, 1–101.

- Schulz, L. (2012). The origins of inquiry: Inductive inference and exploration in early childhood. Trends in Cognitive Sciences, 16(7), 382–389.

- Spelke, E. S., & Kinzler, K. D. (2007). Core knowledge. Developmental Science, 10(1), 89–96.

- Cook, C., Goodman, N. D., & Schulz, L. E. (2011). Where science starts: Spontaneous experiments in preschoolers’ exploratory play. Cognition, 120(3), 341–349.

- Spelke, E. S. (1990). Principles of object perception. Cognitive Science, 14(1), 29–56.

- Rips, L. J., & Hespos, S. J. (2015). Divisions of the physical world: Concepts of objects and substances. Psychological Bulletin, 141(4), 786.

- Baillargeon, R., Li, J., Ng, W., & Yuan, S. (2009). An account of infants’ physical reasoning. Learning and the Infant Mind, 66–116.

- Siegler, R. S., & Chen, Z. (1998). Developmental differences in rule learning: A microgenetic analysis. Cognitive Psychology, 36(3), 273–310.

- Bates, C., Battaglia, P., Yildirim, I., & Tenenbaum, J. B. (2015). Humans predict liquid dynamics using probabilistic simulation. CogSci.

- Battaglia, P. W., Hamrick, J. B., & Tenenbaum, J. B. (2013). Simulation as an engine of physical scene understanding. Proceedings of the National Academy of Sciences, 110(45), 18327–18332.

- Téglás, E., Vul, E., Girotto, V., Gonzalez, M., Tenenbaum, J. B., & Bonatti, L. L. (2011). Pure reasoning in 12-month-old infants as probabilistic inference. Science, 332(6033), 1054–1059.

- Lerer, A., Gross, S., & Fergus, R. (2016). Learning Physical Intuition of Block Towers by Example. ICML.

- Johnson, S., Slaughter, V., & Carey, S. (1998). Whose gaze will infants follow? The elicitation of gaze-following in 12-month-olds. Developmental Science, 1(2), 233–238.

- Csibra, G. (2008). Goal attribution to inanimate agents by 6.5-month-old infants. Cognition, 107(2), 705–717.

- Hamlin, J. K. (2013). Moral Judgment and Action in Preverbal Infants and Toddlers. Current Directions in Psychological Science, 22(3), 186–193.

- Schlottmann, A., Cole, K., Watts, R., & White, M. (2013). Domain-specific perceptual causality in children depends on the spatio-temporal configuration, not motion onset. Frontiers in Psychology, 4, 365.

- Baker, C. L., Saxe, R., & Tenenbaum, J. B. (2009). Action understanding as inverse planning. Cognition, 113(3), 329–349.