¹University of Michigan, ²DeepMind

ICML {Unsupervised RL, Real Life RL} Workshops, 2021

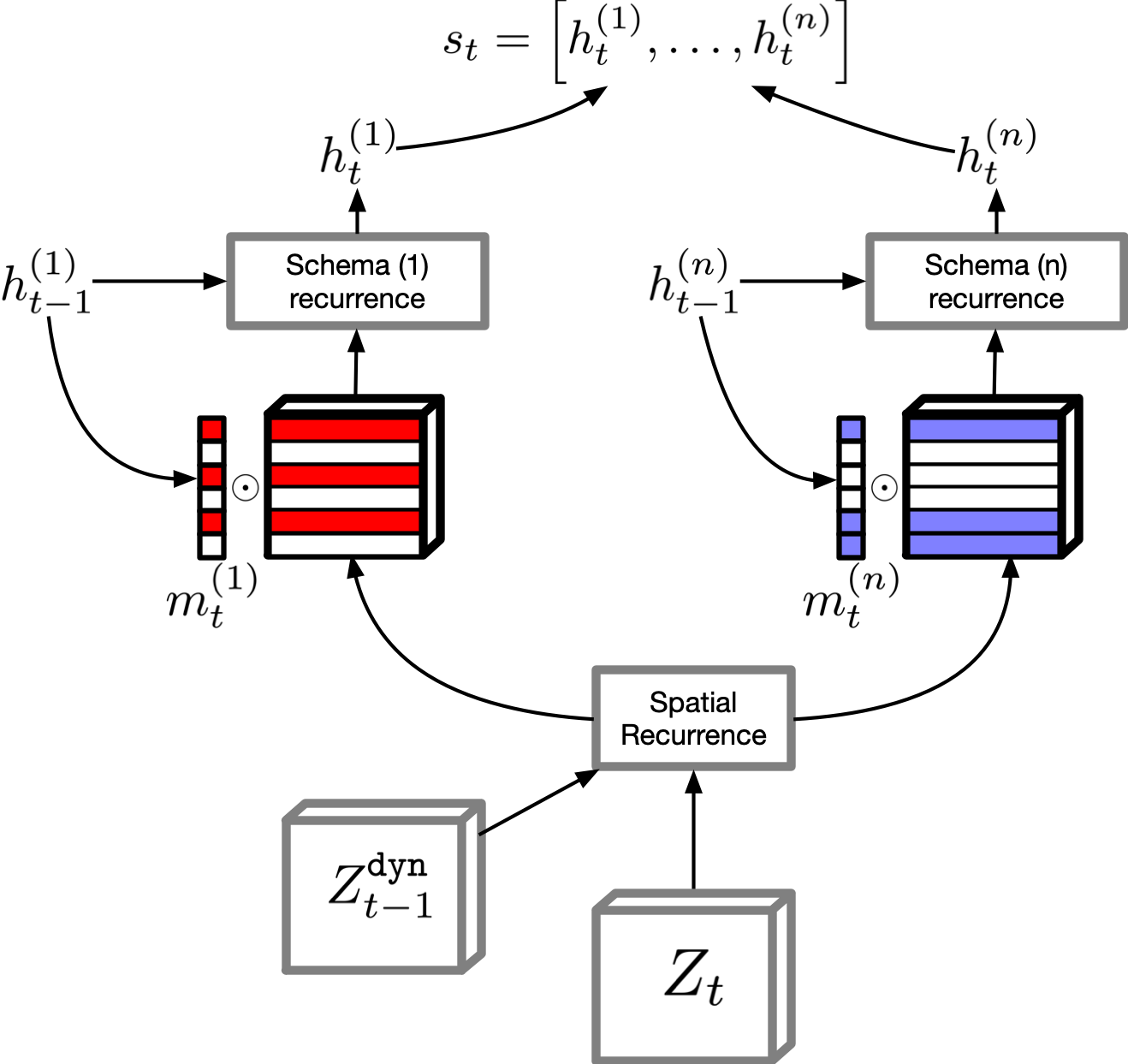

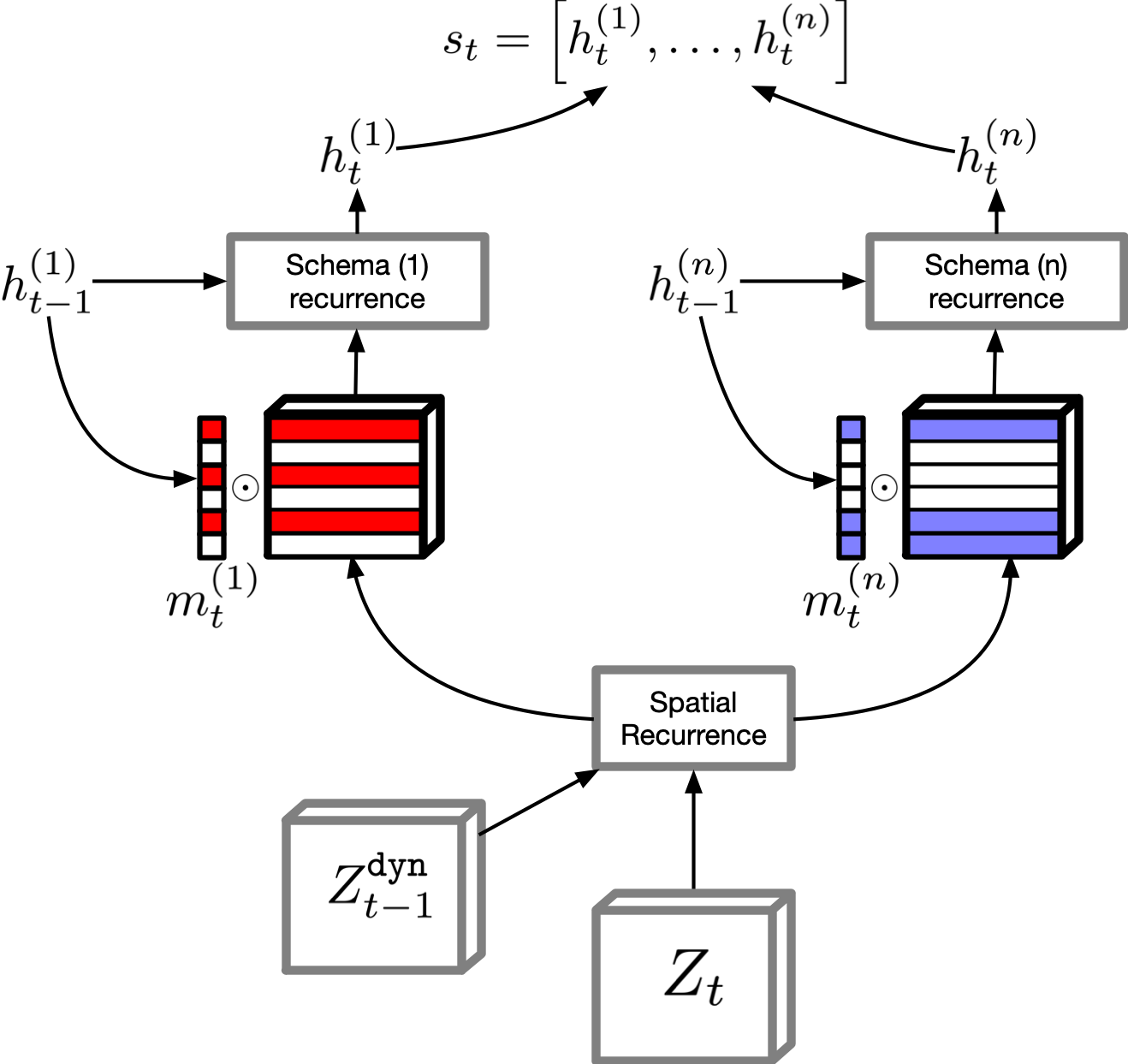

The real world is large and complex. It is filled with many objects besides those defined by a task, and those objects can move with their own interesting dynamics. How should an agent learn to represent state to support efficient learning and generalization in such an environment? In this work, we present a novel memory architecture, Perceptual Schemata, for learning and zero-shot generalization in environments that contain many, potentially moving, objects. Perceptual Schemata represents state using a combination of schema modules, each of which learns to attend to, and maintain a stateful representation of, a different subspace of a spatio-temporal tensor describing the agent’s observations. Our empirical results show that Perceptual Schemata enables a state representation that can track multiple objects observed in sequence, each with independent dynamics, whereas an LSTM cannot. We additionally show that Perceptual Schemata generalizes more gracefully to larger environments with more distractor objects, whereas an LSTM quickly overfits to the training tasks.
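
To make the idea of schema modules more concrete, here is a minimal NumPy sketch of one possible reading of the description above. It is not the authors' implementation. Everything in it is an assumption for illustration: the names `SchemaModule` and `encode_state`, the dot-product attention, the plain tanh recurrent update, and the treatment of the spatio-temporal tensor as a sequence of per-frame feature maps. Weights are random, so the sketch shows only the data flow, not learned behavior.

```python
import numpy as np


def softmax(x):
    """Numerically stable softmax over a 1-D array."""
    e = np.exp(x - x.max())
    return e / e.sum()


class SchemaModule:
    """Hypothetical module: attends over spatial positions, keeps its own state."""

    def __init__(self, feat_dim, hidden_dim, rng):
        scale = 0.1
        self.b_q = rng.normal(0, scale, feat_dim)                # base query (assumed)
        self.W_q = rng.normal(0, scale, (hidden_dim, feat_dim))  # state -> query
        self.W_x = rng.normal(0, scale, (feat_dim, hidden_dim))  # read -> state
        self.W_h = rng.normal(0, scale, (hidden_dim, hidden_dim))
        self.h = np.zeros(hidden_dim)                            # recurrent state

    def step(self, features):
        """features: (num_positions, feat_dim). Returns the attention weights."""
        query = self.b_q + self.h @ self.W_q
        scores = features @ query / np.sqrt(features.shape[1])
        attn = softmax(scores)                 # one weight per spatial position
        read = attn @ features                 # attended summary, (feat_dim,)
        # Simple tanh recurrence; the paper may use a different update rule.
        self.h = np.tanh(read @ self.W_x + self.h @ self.W_h)
        return attn


def encode_state(modules, frames):
    """frames: (T, H, W, C). Returns the concatenated module states."""
    for frame in frames:
        positions = frame.reshape(-1, frame.shape[-1])  # (H*W, C)
        for module in modules:
            module.step(positions)
    return np.concatenate([m.h for m in modules])


rng = np.random.default_rng(0)
modules = [SchemaModule(feat_dim=8, hidden_dim=16, rng=rng) for _ in range(4)]
frames = rng.normal(size=(5, 6, 6, 8))  # 5 time steps of a 6x6 map with 8 channels
state = encode_state(modules, frames)
print(state.shape)
```

Because each module has its own query and its own recurrent state, different modules can in principle follow different regions of the observation over time, which is the property the abstract contrasts with a single LSTM state.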